Preface

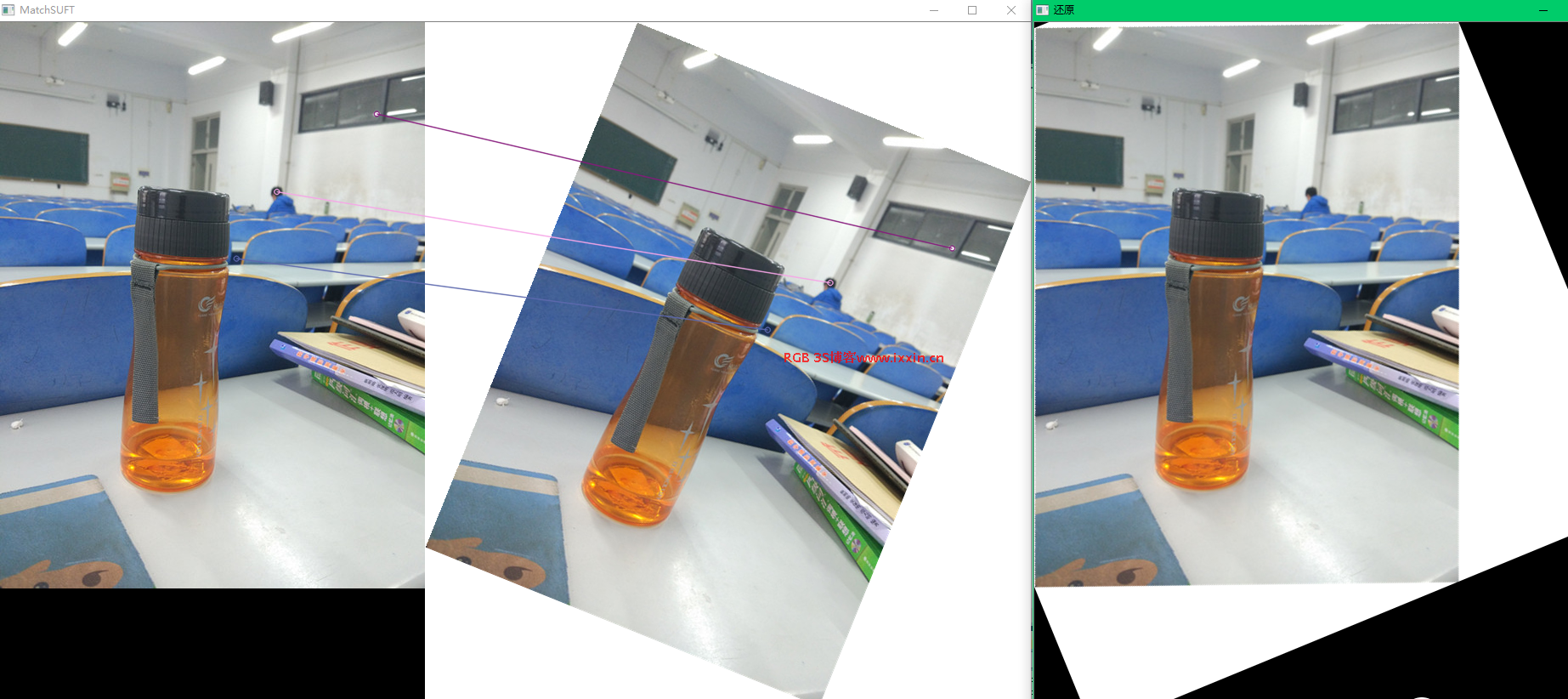

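I previously wrote an example that rotates an image with an affine transformation. Today's title is a bit of a mouthful, but the idea is simple: pick feature points with the SURF operator, match the points between the two images, compute a transformation matrix from the matches, and finally rectify the image with that matrix. It is a small first attempt at applying OpenCV to remote sensing.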
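To make the "compute a transformation matrix from the matches" step more concrete, here is a minimal sketch in plain C++ (no OpenCV) of how a 2x3 affine matrix can be recovered from exactly three point pairs, which is the kind of problem `getAffineTransform` solves. The names `Pt`, `Affine`, `det3` and `solveAffine` are hypothetical helpers introduced only for this illustration, and Cramer's rule is my choice of solver, not necessarily what OpenCV uses internally.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical helper types for this sketch (not OpenCV types).
struct Pt { double x, y; };
// Row-major 2x3 matrix [a b c; d e f], so that
//   x' = a*x + b*y + c
//   y' = d*x + e*y + f
using Affine = std::array<double, 6>;

// Determinant of the 3x3 matrix with rows (a0,b0,c0), (a1,b1,c1), (a2,b2,c2).
static double det3(double a0, double b0, double c0,
                   double a1, double b1, double c1,
                   double a2, double b2, double c2)
{
    return a0 * (b1 * c2 - b2 * c1)
         - b0 * (a1 * c2 - a2 * c1)
         + c0 * (a1 * b2 - a2 * b1);
}

// Solve for the affine matrix that maps src[i] to dst[i], i = 0..2.
// Returns false when the three source points are (nearly) collinear,
// because the system then has no unique solution.
bool solveAffine(const Pt* src, const Pt* dst, Affine& m)
{
    assert(src != nullptr && dst != nullptr);
    const double d = det3(src[0].x, src[0].y, 1.0,
                          src[1].x, src[1].y, 1.0,
                          src[2].x, src[2].y, 1.0);
    if (std::fabs(d) < 1e-12) return false;

    // Cramer's rule, once for the x' row and once for the y' row.
    for (int row = 0; row < 2; ++row) {
        const double u0 = row == 0 ? dst[0].x : dst[0].y;
        const double u1 = row == 0 ? dst[1].x : dst[1].y;
        const double u2 = row == 0 ? dst[2].x : dst[2].y;
        m[row * 3 + 0] = det3(u0, src[0].y, 1.0,
                              u1, src[1].y, 1.0,
                              u2, src[2].y, 1.0) / d;
        m[row * 3 + 1] = det3(src[0].x, u0, 1.0,
                              src[1].x, u1, 1.0,
                              src[2].x, u2, 1.0) / d;
        m[row * 3 + 2] = det3(src[0].x, src[0].y, u0,
                              src[1].x, src[1].y, u1,
                              src[2].x, src[2].y, u2) / d;
    }
    return true;
}
```

The collinearity check matters in practice: if the three chosen matches lie close to a line, the resulting matrix is unstable, which is one reason a single bad match among the three can distort the rectified image badly.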

Code

/*
Author: 山科_xxin
Date: 2017-03-27 22:53:11
Function: OpenCV: select points with the SURF operator, match them, and rectify the image with an affine transformation
Category: OpenCV function practice
*/

#include <iostream>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include <opencv2/highgui/highgui.hpp>
#include "opencv2/core/core.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include "opencv2/features2d/features2d.hpp"
// The next two headers come from the OpenCV 2.4.x module layout
#include "opencv2/nonfree/nonfree.hpp"   // SurfFeatureDetector, SurfDescriptorExtractor
#include "opencv2/legacy/legacy.hpp"     // BruteForceMatcher

using namespace std;
using namespace cv;

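// Bubble sort ("maopao") of the matches by ascending distance.
// The vector is taken by reference and whole DMatch objects are swapped,
// so the caller sees the sorted order and queryIdx/trainIdx stay attached
// to their distance.
int maopao(vector< DMatch >& matches)
{
    int msize = (int)matches.size();
    DMatch tmp;
    for (int i = 0; i < msize - 1; i++)
        for (int j = 0; j < msize - 1 - i; j++)
        {
            if (matches[j].distance > matches[j+1].distance)
            {
                tmp = matches[j];
                matches[j] = matches[j+1];
                matches[j+1] = tmp;
            }
        }
    // Print the sorted distances
    for (int i = 0; i < msize; i++)
        cout << matches[i].distance << " ";
    cout << endl;
    return 0;
}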

int main()
{
    cout << "SURF algorithm:" << endl;
    // Load both images as 3-channel color images
    Mat srcImage1 = imread("G:\\opencv\\b1.JPG", 1);
    Mat srcImage2 = imread("G:\\opencv\\b11.jpg", 1);
    if (!srcImage1.data || !srcImage2.data)
    {
        cout << "Failed to open the images" << endl;
        return -1;
    }

    double t = (double)getTickCount();
    int minHessian = 700;   // Hessian threshold for the SURF detector
    SurfFeatureDetector detector( minHessian );
    std::vector<KeyPoint> keyPoint1, keyPoints2;
    // Detect keypoints in both images
    detector.detect( srcImage1, keyPoint1 );
    detector.detect( srcImage2, keyPoints2 );
    cout << "Keypoints in image 1: " << keyPoint1.size() << endl;
    cout << "Keypoints in image 2: " << keyPoints2.size() << endl;
    // Compute a SURF descriptor for every keypoint
    SurfDescriptorExtractor extractor;
    Mat descriptors1, descriptors2;
    extractor.compute( srcImage1, keyPoint1, descriptors1 );
    extractor.compute( srcImage2, keyPoints2, descriptors2 );
    t = ((double)getTickCount() - t)/getTickFrequency();
    cout << "SURF detection and description took " << t << " s" << endl;

    // Brute-force matching of the descriptors using the L2 distance
    BruteForceMatcher< L2<float> > matcher;
    std::vector< DMatch > matches;
    matcher.match( descriptors1, descriptors2, matches );
    maopao(matches);
    // Find the smallest and largest descriptor distance among the matches
    float min_dist = 100;
    float max_dist = -100;
    for (size_t i = 0; i < matches.size(); i++)
    {
        float dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }
    cout << "Number of matches: " << matches.size() << endl;
    cout << "Minimum distance: " << min_dist << endl;
    cout << "Maximum distance: " << max_dist << endl;

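    // An affine transformation needs exactly three point pairs
    if (matches.size() < 3)
    {
        cout << "Fewer than 3 matches, cannot compute the affine transformation" << endl;
        return -1;
    }
    Point2f srcTri[3];
    Point2f dstTri[3];
    // Use the first three matches as control points:
    // destination points come from image 1, source points from image 2
    for (int i = 0; i < 3; i++)
    {
        dstTri[i] = keyPoint1.at(matches[i].queryIdx).pt;
        srcTri[i] = keyPoints2.at(matches[i].trainIdx).pt;
    }
    vector<DMatch> goodMatches;
    for (int i = 0; i < 3; i++)
    {
        goodMatches.push_back(matches[i]);
        cout << "Keypoint " << matches[i].queryIdx << " in image 1 matched keypoint " << matches[i].trainIdx << " in image 2" << endl;
    }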

    //cout<<"Number of goodMatches: "<<goodMatches.size()<<endl;
    /*
    // Limit the number of matches
    int point;
    point = 30;
    nth_element(matches.begin(),              // start position
                matches.begin() + (point-1),  // position of the sorted element
                matches.end());               // end position
    // Remove every element after that position
    matches.erase(matches.begin() + point, matches.end());
    */

    Mat imgMatches;
    // Draw the selected matches side by side, without the unmatched keypoints
    drawMatches( srcImage1, keyPoint1, srcImage2, keyPoints2, goodMatches, imgMatches,
                 Scalar::all(-1), Scalar::all(-1), vector<char>(),
                 DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );
    // Output image with the same size and type as image 2
    Mat dstImage_warp = Mat::zeros( srcImage2.rows, srcImage2.cols, srcImage2.type() );
    // 2x3 affine matrix that maps image 2 into the frame of image 1
    Mat warpMat = getAffineTransform( srcTri, dstTri );
    warpAffine( srcImage2, dstImage_warp, warpMat, dstImage_warp.size() );
    namedWindow("MatchSURF", 1);
    imshow("MatchSURF", imgMatches);
    imshow("Restored", dstImage_warp);
    waitKey(0);
    return 0;
}

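Result

One shortcoming: I did not handle the black and white borders around the rectified image. It should be possible to center the result. I think so, anyway, so please don't hold me to it...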
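One possible way to deal with the borders, sketched here in plain C++ under my own assumptions and not tested against the images in this post: push the four image corners through the affine matrix, take their bounding box, and add a translation so that the box starts at the origin. The names `Affine`, `Canvas` and `fitToCanvas` are hypothetical and exist only for this illustration.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Row-major 2x3 affine matrix [a b c; d e f] (hypothetical helper type).
using Affine = std::array<double, 6>;

// Adjusted matrix plus the output size that holds the whole warped image.
struct Canvas {
    Affine m;
    int width;
    int height;
};

// Shift the transform so the warped image fits exactly into the output canvas.
Canvas fitToCanvas(const Affine& m, int width, int height)
{
    assert(width > 0 && height > 0);
    // The four corners of the source image.
    const double xs[4] = {0.0, double(width), double(width), 0.0};
    const double ys[4] = {0.0, 0.0, double(height), double(height)};

    // Bounding box of the transformed corners, seeded with the first corner.
    double minX = m[0] * xs[0] + m[1] * ys[0] + m[2];
    double minY = m[3] * xs[0] + m[4] * ys[0] + m[5];
    double maxX = minX;
    double maxY = minY;
    for (int i = 1; i < 4; ++i) {
        const double u = m[0] * xs[i] + m[1] * ys[i] + m[2];
        const double v = m[3] * xs[i] + m[4] * ys[i] + m[5];
        minX = std::min(minX, u);
        maxX = std::max(maxX, u);
        minY = std::min(minY, v);
        maxY = std::max(maxY, v);
    }

    Canvas out;
    out.m = m;
    out.m[2] -= minX;   // move the left edge of the box to x = 0
    out.m[5] -= minY;   // move the top edge of the box to y = 0
    out.width  = int(std::ceil(maxX - minX));
    out.height = int(std::ceil(maxY - minY));
    return out;
}
```

With OpenCV, the six values of the adjusted matrix would be copied into a 2x3 `CV_64F` `Mat` and passed to `warpAffine` together with `Size(width, height)` from the result. This keeps the whole warped image visible; the corners of the canvas that the image does not cover remain filled with the border color.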